This past November I attended iPRES 2015. iPRES is one of the foremost international conferences on digital preservation, and the conference location rotates between North America, Europe, and Asia. iPRES 2015 was hosted by the University of North Carolina at Chapel Hill.

iPRES 2015 was a great opportunity to learn about recent developments in digital preservation research and practice, and to swap stories and ideas with fellow archivists as well as practitioners of many other stripes. The digital preservation community is highly varied and necessarily involves the expertise of multiple professions, and one of the most satisfying elements of my time at iPRES was the chance to look at familiar problems from new angles.

iPRES Amplified

This year, the iPRES Organizing Committee invited conference attendees and those who couldn’t be present in person to participate in the opening and closing sessions via social media, and to communicate throughout the conference using the hashtag #ipres2015. In addition to the participatory opening and closing sessions, many panels, sessions and workshops at iPRES 2015 generated Google Docs that are now publicly available through the iPRES Amplified page.

Session highlights

Sessions covered topics ranging from high-level long-term preservation and storage architectures to the nitty-gritty of preservation tools and workflows. Recently completed and ongoing projects to keep an eye on include:

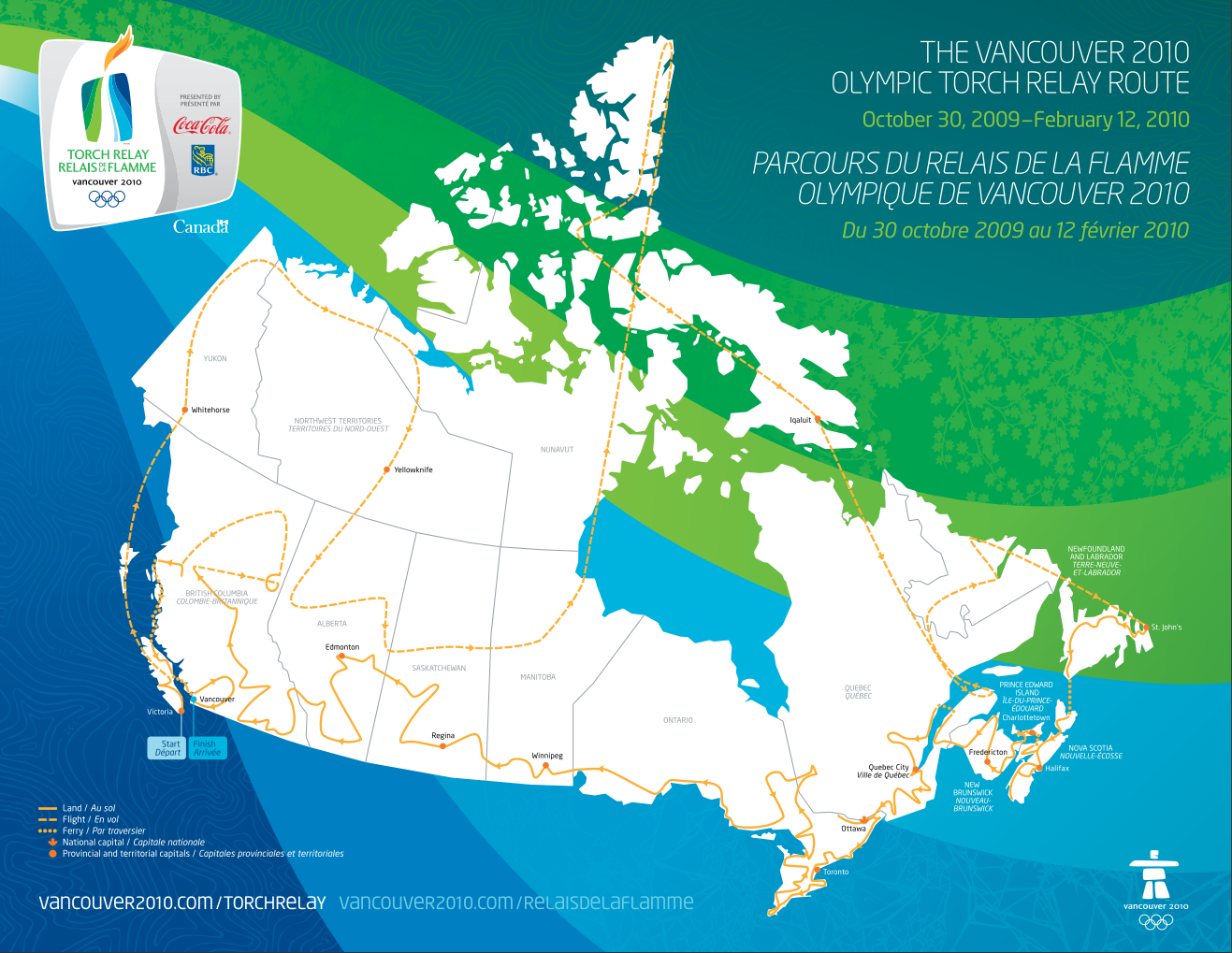

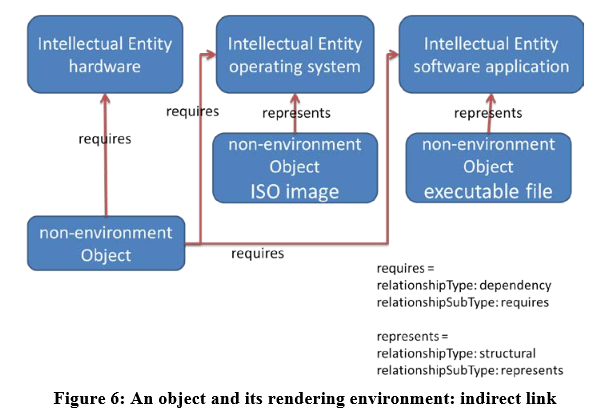

- the release of version 3.0 of the PREMIS Data Dictionary for Preservation Metadata. PREMIS is integral to Archivematica and AtoM, the digital preservation and access systems we use here at the City of Vancouver Archives. PREMIS 3.0 contains some significant changes and additions, the most exciting of which (for me!) is the transformation in the way hardware and software rendering environments are modeled. Without going into too much jargon-laden detail, the changes in environment modeling allow the components of a rendering environment (e.g. an operating system, a software application, a piece of hardware) to be described and preserved independently of the digital content that requires them, and independently of one another.

Going forward, this new approach will facilitate the preservation of rendering environments alongside the records that depend on them – a likely necessity for some types of born-digital records.

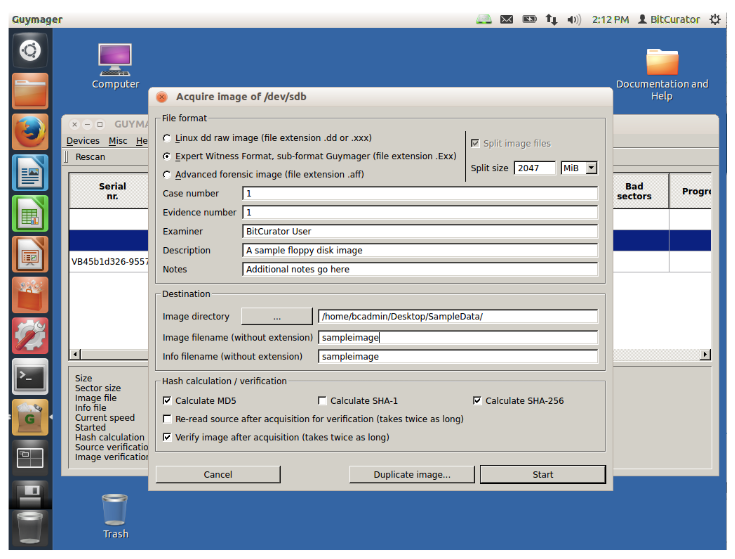

- BitCurator, which is now receiving ongoing community support and development through a consortium that reached 20 members just before iPRES 2015 kicked off. BitCurator began life as a joint project of UNC Chapel Hill’s School of Information and Library Science and the Maryland Institute for Technology in the Humanities, with the goal of developing a system that would incorporate digital forensics tools and methods into archival workflows. Among many other things, digital forensics tools can:

- support processing of born-digital records on legacy media in accordance with the archival principle of original order (keep the records in the order the creator left them);

- help ensure trustworthiness of the records by documenting all actions performed on them before they are ingested into a repository; and

- through the use of software-based write-blockers, reduce the risk of accidental changes to data.

Capturing a disk image in the BitCurator environment. Source: BitCurator Consortium, BitCurator Quick Start Guide

The BitCurator project ran from 2011-2014, and produced a freely downloadable, open-source environment, which is now being managed by the BitCurator Consortium in association with the Educopia Institute. I am looking forward to getting my hands dirty (virtually speaking) with BitCurator this year, setting up a BitCurator workstation and developing workflows with our digital archives team.

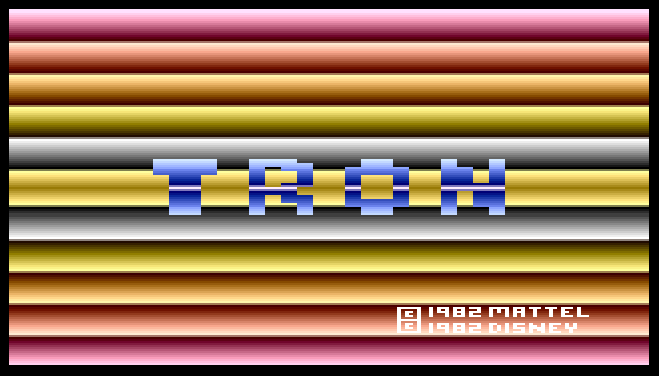

- ongoing research toward increasing the viability of emulation as a preservation strategy. Broadly speaking, emulation refers to the ability of a computer program to behave like another program; usually this means getting a modern environment to behave like an older, obsolete environment. For example, the JSMESS emulator used by the Internet Archive’s Console Living Room provides emulations of many early home video game consoles, allowing you to access and play hundreds of games you may not have seen since 1982, if at all.

The relative merits and drawbacks of emulation versus migration have been vigorously debated for many years. While emulation is valued for preserving the original look and feel of content, it has generally been both very expensive and very complex to provision. Migration (the continual transfer of data to new file formats, and sufficiently expensive and complex in its own right!) is currently the approach taken in the vast majority of institutions. Emulation projects presented include:

- Project EMiL, a joint project of the University of Freiburg, the Karlsruhe University of Arts and Design, the German National Library, and the Bavarian State Library, which focuses on emulation of complex interactive multimedia objects such as internet-based artworks and scientific simulations. EMiL’s goal is to develop a prototype system for provisioning emulated environments that is optimized for the needs of memory institutions. At a session titled “Preservation Strategies and Workflows,” Project EMiL researchers spoke about the challenges involved in determining appropriate emulation environments for CD-ROMs (remember those?) that are already inaccessible through current systems and lack the technical metadata that would be used as guideposts. Their solution involves the development of a CD-ROM characterization tool to ‘guess’ at the most suitable environment based on characteristics of the CD-ROM. If this sounds as exciting to you as it does to me, the full paper can be found here.

- Rhizome and the New Museum’s project to make artist Theresa Duncan’s CD-ROM games from the 1990s playable online through the University of Freiburg’s bwFLA Emulation-as-a-Service (EaaS) platform, which allows a web browser to connect the user to a cloud-based emulated environment. Emulation is particularly valuable for the preservation and continued accessibility of games, where maintaining the look, feel, and behaviour of the original environment is key.

- BitCurator Access, a project undertaken at UNC Chapel Hill following the end of the first BitCurator project. Where the first BitCurator project focused on capture of disk images from legacy media, BitCurator Access is exploring methods for providing web-based and local access to the extracted disk images, and will include the bwFLA Emulation-as-a-Service (EaaS) platform mentioned above in its assessment.

Post-conference workshop on open-source digital preservation tools and workflows

The day after the official close of the conference, I attended a full-day workshop where participants (most of whom were already open-source users, like us) had the opportunity to share their experiences with various open-source tools, discuss the opportunities and challenges associated with going open-source, identify gaps among existing tools, and brainstorm on wish-lists for tool integrations and features. A number of common themes emerged, most of which were familiar to me from my own work. Some of these were:

- the current gap in tool integrations and workflows for the “pre-ingest” phase of processing digital records. Taken broadly, pre-ingest refers to all the work done from the time of transfer by a donor to the time the records are ingested into the digital preservation system (for us, that’s Archivematica). This work can include performing fixity checks on received content to ensure files were not corrupted during transfer, performing archival appraisal (determining what will be kept and what will not), and analyzing the file formats present to inform workflow decisions.

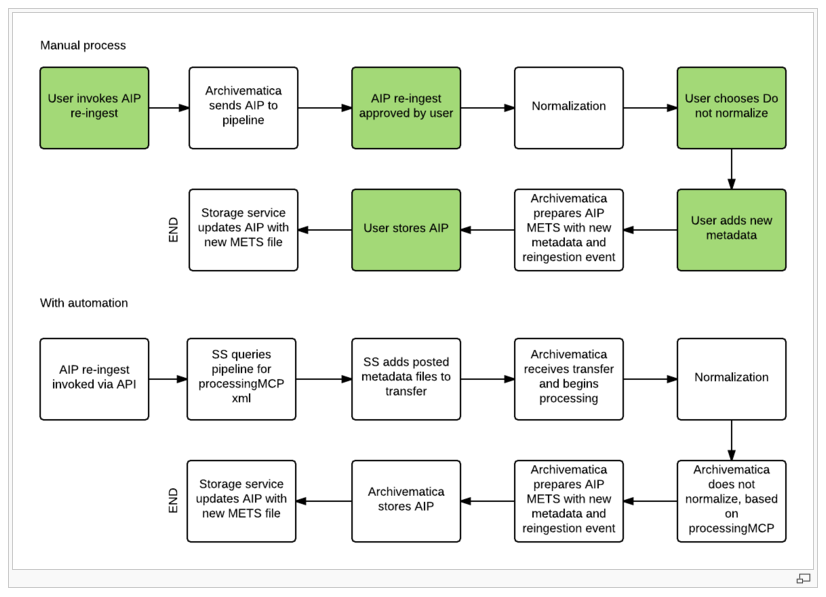

- the need to re-ingest archival information packages (the ‘preservation master’ files and associated metadata) when updates are made to the metadata. Happily for Archivematica users, lead developers Artefactual will be rolling out support for re-ingest with the release of Archivematica 1.5.0.

- the need for non-developers to participate in projects through, for example, contribution of use cases, documentation, and bug reports. The open-source digital preservation community is full of dedicated, generous, collaborative folk, and this need was continually emphasized. A diversity of contributions from different sizes and types of institutions can encourage development of scalable tools and systems, and mitigate the possibility that projects only suit one segment of the community.
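For readers curious what the pre-ingest fixity check mentioned above might look like in practice, here is a minimal sketch in Python. It is not taken from Archivematica, BitCurator, or any tool discussed at the workshop. It assumes the donor supplies a manifest of SHA-256 checksums keyed by relative file path, and the function names `sha256_of` and `verify_transfer` are my own, purely illustrative.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(root: Path, manifest: dict[str, str]) -> dict[str, str]:
    """Check each file named in the manifest against its expected digest.

    `manifest` maps a relative path to the checksum recorded before
    transfer (a hypothetical format, assumed for this sketch).
    Returns a mapping of relative path -> "ok", "changed", or "missing".
    """
    results = {}
    for relative_path, expected in manifest.items():
        target = root / relative_path
        if not target.is_file():
            results[relative_path] = "missing"
        elif sha256_of(target) == expected.lower():
            results[relative_path] = "ok"
        else:
            results[relative_path] = "changed"
    return results
```

Calling `verify_transfer(Path("transfer_folder"), manifest)` on a received transfer would flag any file whose contents no longer match the checksum recorded by the donor, which is the kind of result that could feed into a pre-ingest workflow decision.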

The chance to assess as a group the current state of open-source digital preservation was a great conclusion to my conference week. For those interested in looking under the hood, the collaboratively-edited workshop notes are available in the Workshops & Tutorials folder in the iPRES 2015 Google Drive.

Digital preservation is, like all technology-heavy fields, given to rapid change and iterative refinement of processes, and keeping current is a necessity for practitioners like me. iPRES 2015 left me feeling refreshed and inspired to tackle some of the City’s digital records coming my way in 2016. Thanks to the conference organizers for a great event!